Brain Boost

The Power of AI

Welcome to Polymathic Being, a place to explore counterintuitive insights across multiple domains. These essays explore common topics from different perspectives and disciplines to uncover unique insights and solutions.

Today's topic shares practical insights and tips on using Large Language Models to conduct objective research on divisive and complex topics. We’ll dig into how AI should Augment Intelligence, not replace a human-centric investigation, and analyze a common critique about the reliability of AI-generated content.

Intro

Let’s be honest, the internet has been turning into The Enshittification of Everything, where Algowhores compete with Fear Porn, and forums explode with opinions that are like assholes; everyone has one, and most of them stink. So, how does one navigate that hellscape and extract useful information?

Well, if you haven’t been paying attention, AI has become a fantastic research tool for everything from cooking a brisket to wiring a house, to biohacking, psychology, and even treadmill repair. And yet, it’s overlooked by many people who have yet to realize the power of LLMs, and avoided by others who don’t understand or trust how they work.[1]

But HALLUCINATIONS!

Yes, when Large Language Models (LLMs) like ChatGPT first entered the market, they produced errors. However, the accusation of ‘hallucination’ was misapplied. LLMs don’t hallucinate; they return inaccurate information because their job was never to ensure factual accuracy per se, but to produce statistically likely and linguistically complete outputs based on the data they were trained on. It was humans who were hallucinating about what the AI was supposed to be doing.

Very quickly, that nuance stopped mattering: users found ways to prompt LLMs that reduced the errors, and AI companies released new versions with advanced reasoning and the ability to search the internet for up-to-date information. Simply put, LLMs have overcome many of their original limitations and have become incredible research assistants for more complex questions.

A Personal Research Assistant

The other day, I was preparing to smoke a brisket. I’ve spent years perfecting my smoking, but there was one question I hadn’t answered well online because of the divergent perspectives: Should I separate the brisket point (thick part) from the flat (thin part) to reduce the smoke time?

Now, if you’ve spent any time online, you’ll know how divisive opinions on the most mundane topics can be. Take the time I was going to run an electric subpanel to a workshop and was comparing copper wire to aluminum. I tell you what, if you thought abortion or homosexuality were hot topics… the extreme positions on wiring shocked me. A simple question on a forum sparked a conflagration of charged opinions, making it hard to determine whether aluminum was absolutely fine or whether I was going to face charges for burning the entire subdivision down.[2]

I bring up this example because I just asked ChatGPT o3, OpenAI’s model with advanced reasoning, the same question. The result was incredible: readable, contextualized, neutral in perspective, and complete with sources. (Check it out here)

Back to my question on brisket. ChatGPT searched online, parsed the available blogs, forums, and recipes, and prepared a pro/con analysis. It concluded that, if timing wasn’t a limitation, it’s more foolproof to smoke the entire brisket. I followed up by asking why it recommended smoking the meat fat side down, and the LLM explained it better than I could have pieced together from half a dozen websites with differing opinions. (see Brisket query here)

It’s this sort of objective synthesis that I’ve used on many topics to combine, analyze, and summarize complex and often divisive issues such as:

The recent Israel, Iran, and US conflict.

A Deep Research review of Gary Brecka’s bio-hacking protocols

A linguistic review of the words Sympathy and Empathy and how these terms have evolved and have been misused (in prep for a future essay)

An analysis of a complicated, high-dividend ETF to understand investment risk.

LLMs have become incredibly useful research assistants that I can deploy on more complex and nuanced investigations. It’s no different from what I do at work with my junior employees, as we review and distill the latest science and technology to build cool space-based systems. In fact, these LLMs have become a critical resource on that journey, as they provide an objective, third-party view of information. I not only allow my teams to use these tools, I encourage it and challenge them to apply the tools to more complex tasks.

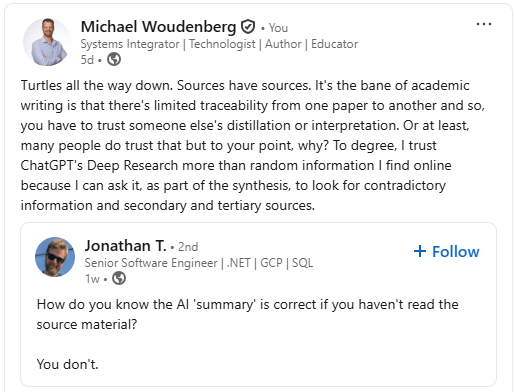

A common objection is epitomized in this recent LinkedIn post where Jonathan asks, “How do you know the AI 'summary' is correct if you haven't read the source material? You don't.” And yet, as I replied, sources have sources: one academic paper links to another, each one interpreting, summarizing, and distilling the findings of dozens of other papers. The difference with LLMs is that I can ask them to look for and include contradictory information as part of the analysis.

I also use LLMs like I’d use Google; I search for primary sources, review the information, verify the authenticity and credibility, and explore secondary and tertiary sources. I then incorporate new links and insights, providing them back to the LLM to explore, dive deeper into, or find contradictory information that refutes the findings. Using an LLM isn’t a once-and-done; it’s a conversation where I can challenge it, and I can have it challenge me. It can be a debate partner, a critic, and a researcher.

For context, one of the most dangerous aspects of human research is that many researchers bias their results to give a person in authority the answer they think that person wants, rather than the answer that person needs. An LLM can be the opposite of a ‘yes man’ and can also help you ensure your research teams aren’t inadvertently biasing the results. I take this step at work and have used the results to build more open relationships with my teams that foster constructive disagreement.

So, let's revisit the objection on trusting AI summaries: I don’t trust AI, but I do entrust it to distill, summarize, critique, counter, and challenge the data and my own perceptions and biases. Ironically, I also don’t trust human authors, but I do entrust them to share a perspective that I’ll evaluate with critical thinking.

The key here is that I’m using AI to augment my intelligence, not replace it. The words you read here are mine; the counterintuitive insights come from analysis, conversations with you, and the experiences of life. I find it necessary to apply that caveat because there’s a vocal minority who willingly uses Google, but balks at the idea of AI, viewing it as a replacement of the human, at worst, or cheating at best. As we explored in Artists vs. AI, their argument is as old as the written word, which Plato railed against for similar reasons. The argument continues today, and yet, what do we overlook in such a powerful tool?

The Polymathic Mindset - LLMs can help bridge the silos of excellence, where a simple addition to a query can be “review this topic against other domains and disciplines and find corollaries or contradictions.”

Challenging Bias - I’ve found great success in having an LLM provide a counterpoint to an argument I’m making. I’ve had it identify appropriate strawman arguments, examine where common logical fallacies might be leveraged against it, and look for serious critique.

Ensuring Logical Consistency - Am I being logical? Am I disprovable? LLMs can quickly compare your work to that of many others.

Research - We’ve covered some great examples thus far. Still, the power of LLMs to search, distill, and contextualize the growing pile of content on the internet is a great way to separate signal from noise, especially when coupled with the first three points above.
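As a rough illustration, the four uses above can be kept as reusable follow-up instructions that get appended to a research question. This is only a sketch under stated assumptions: the names `FOLLOW_UPS` and `build_prompt` are hypothetical, and the wording of every snippet except the cross-domain one quoted above is invented for illustration. The code doesn’t call any particular LLM; it only assembles text you could paste into one.

```python
# Hypothetical follow-up instructions, one per use described above.
# Only the "polymathic" wording comes from the essay; the rest are assumptions.
FOLLOW_UPS = {
    "polymathic": (
        "Review this topic against other domains and disciplines "
        "and find corollaries or contradictions."
    ),
    "challenge_bias": (
        "Provide the strongest counterpoint to my argument and point out "
        "logical fallacies that could be leveled against it."
    ),
    "consistency": (
        "Check whether my reasoning is logically consistent and whether "
        "my claims could be disproven."
    ),
    "research": (
        "Search for primary sources, summarize the pros and cons, "
        "and include contradictory information with links."
    ),
}


def build_prompt(question, uses=()):
    """Append the selected follow-up instructions to a research question."""
    unknown = [u for u in uses if u not in FOLLOW_UPS]
    if unknown:
        raise ValueError(f"Unknown use(s): {unknown}")
    parts = [question.strip()]
    parts += [FOLLOW_UPS[u] for u in uses]
    # Separate each instruction with a blank line so it reads as its own request.
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_prompt(
        "Should I separate the brisket point from the flat to reduce the smoke time?",
        uses=["research", "challenge_bias"],
    ))
```

Keeping the instructions separate from the question makes it easy to rerun the same question with a different mix, for example asking for research first and a counterpoint later in the same conversation.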

Summary

AI should mean Augmenting Intelligence, and using it intelligently requires a human-centric approach like the one I’ve modeled. What’s unintelligent is refusing to use it, failing to recognize how much you already use it, or using it to replace your human contribution and your critical thinking.

Ironically, AI, used right, can enable better information, improved thinking, and greater human connection than sifting through the bowels of the internet that are populated by algowhores, fear porn, and the enshittification of information. This, I think, is one of the more counterintuitive insights regarding the power of LLMs.

If you’re interested in keeping up with the latest AI capabilities, I highly recommend following Daniel Nest, who writes the fantastic Substack Why Try AI. Each week, he publishes a Sunday rundown of the latest advances, along with prompting tips and tricks.


Further Reading from Authors I Appreciate

I highly recommend the following Substacks for their great content and complementary explorations of topics that Polymathic Being shares.

Goatfury Writes - All-around great daily essays

Never Stop Learning - Insightful Life Tips and Tricks

Cyborgs Writing - Highly useful insights into using AI for writing

Educating AI - Integrating AI into education

Socratic State of Mind - Powerful insights into the philosophy of agency

[1] There’s a sizeable contingent emerging online with a complete zero-AI reaction. I’ve addressed that response in several previous posts and don’t want to exhaust the conversation. But to avoid pointless accusations: I don’t use AI for my writing; I do use it for research, to fact-check, and to analyze. Needless to say, this isn’t going to pacify the never-AI crowd who are subscribing to the ‘one drop rule’ of AI.

[2] Fun fact: aluminum is fine, just don’t tell anyone.
